In today’s digital world, data is being created at lightning speed—from smart devices, apps, IoT sensors, vehicles, and more. But where should all that data go? And how quickly should it be processed?

Two major technologies are shaping this conversation: cloud computing and edge computing.

If you’re confused about the difference or wondering which one makes sense for your business or project in 2025, this guide is for you.

What Is Cloud Computing?

Cloud computing delivers computing services—like storage, servers, databases, and software—over the internet (“the cloud”). Instead of relying on your local computer or a private data center, cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud host your data and applications on their servers.

Benefits of cloud computing:

- Centralized data processing and management

- Easy scalability (up or down)

- Lower upfront costs (pay-as-you-go model)

- Global accessibility

- Provider-managed infrastructure updates and maintenance
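Here is one way to make "scale up or down" concrete: a minimal Python sketch of a proportional autoscaling rule. It is loosely modeled on the formula documented for the Kubernetes Horizontal Pod Autoscaler (desiredReplicas = ceil[currentReplicas * currentMetricValue / desiredMetricValue]). The function name, the CPU-percentage metric, and the min/max bounds are illustrative assumptions. Real cloud autoscalers also add cooldowns and tolerances.

```python
def desired_instances(current: int, observed_pct: int, target_pct: int,
                      min_instances: int = 1, max_instances: int = 20) -> int:
    """Proportional autoscaling: size the fleet so average CPU moves toward target."""
    if current < 1 or target_pct <= 0:
        raise ValueError("current must be >= 1 and target_pct must be > 0")
    # ceil(current * observed / target) using integer math to avoid float rounding
    raw = -(-current * observed_pct // target_pct)
    # Respect the configured lower and upper bounds
    return max(min_instances, min(max_instances, raw))

print(desired_instances(4, observed_pct=90, target_pct=60))  # 6: busy, scale up
print(desired_instances(4, observed_pct=20, target_pct=60))  # 2: idle, scale down
```

With pay-as-you-go pricing, shrinking from 4 instances to 2 during quiet periods is where much of the cost saving comes from.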

💡 Example: Services like Netflix and Google Drive rely heavily on cloud computing to deliver video and files to millions of users.

What Is Edge Computing?

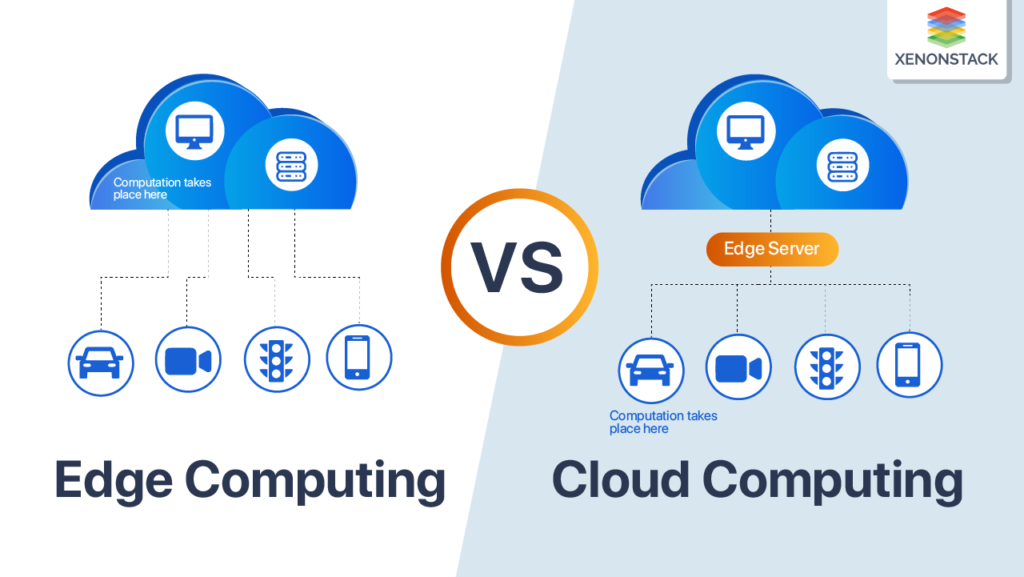

Edge computing pushes data processing closer to the “edge” of the network—near the source of the data (like a device, sensor, or machine), instead of a centralized cloud server.

Think of it like this:

Rather than sending everything to a remote cloud for processing, edge computing handles some tasks locally or at nearby mini data centers.
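One way to picture "handles some tasks locally" is as a placement rule. A task goes to the cloud only if the network round trip plus cloud processing fits within the task's deadline. Otherwise it is processed on the device. This is a hypothetical Python sketch. The function, its parameters, and the timings are illustrative assumptions, not any platform's actual scheduler.

```python
def choose_location(deadline_ms: float, cloud_compute_ms: float,
                    cloud_rtt_ms: float, online: bool = True) -> str:
    """Decide where to run a task (hypothetical placement rule)."""
    cloud_total_ms = cloud_rtt_ms + cloud_compute_ms  # network round trip + processing
    if online and cloud_total_ms <= deadline_ms:
        return "cloud"  # deadline can be met remotely, so use the cloud's capacity
    return "edge"       # too slow or unreachable: process on the device

# A safety check needed within 20 ms cannot wait for an 80 ms round trip
print(choose_location(deadline_ms=20, cloud_compute_ms=2, cloud_rtt_ms=80))  # edge
# A dashboard refresh allowed 5 seconds can go to the cloud
print(choose_location(deadline_ms=5000, cloud_compute_ms=50, cloud_rtt_ms=80))  # cloud
```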

Benefits of edge computing:

- Ultra-low latency (faster response time)

- Better performance for real-time applications

- Reduced bandwidth usage

- Better data privacy (sensitive data can be processed on-site instead of being sent over the network)

- Offline capabilities (process data even without internet)
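Offline capability usually relies on a store-and-forward pattern. Readings are buffered on the device and uploaded once a connection returns. The minimal Python sketch below assumes a hypothetical `send` callback that returns True on success. The class name and buffer size are illustrative.

```python
from collections import deque

class StoreAndForward:
    """Buffer readings on the device and upload them when a link is available."""

    def __init__(self, send, max_buffer=10_000):
        self.send = send  # hypothetical upload callback: returns True on success
        self.buffer = deque(maxlen=max_buffer)  # oldest readings drop out if full

    def record(self, reading):
        self.buffer.append(reading)

    def flush(self, online):
        """Upload buffered readings in order; return how many were sent."""
        sent = 0
        while online and self.buffer:
            if not self.send(self.buffer[0]):
                break  # upload failed: keep the reading and retry on the next flush
            self.buffer.popleft()
            sent += 1
        return sent

uploaded = []

def fake_send(reading):
    uploaded.append(reading)  # stand-in for a real network call
    return True

node = StoreAndForward(fake_send)
for temperature in (21.5, 21.7, 22.0):
    node.record(temperature)
print(node.flush(online=False))  # 0: still offline, readings stay on the device
print(node.flush(online=True))   # 3: connection restored, backlog uploaded
```

The bounded `deque` is a deliberate trade-off. If an outage lasts longer than the buffer can hold, the oldest readings are discarded rather than exhausting the device's memory.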

💡 Example: Self-driving cars use edge computing to process data instantly and make split-second decisions—because waiting for cloud responses isn’t fast enough.
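A quick calculation shows why milliseconds matter here. For illustration only, assume about 10 ms for on-board processing and about 100 ms for a cloud round trip. This Python snippet computes how far a car at 100 km/h travels before a decision arrives:

```python
def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Metres travelled while waiting delay_ms for a decision."""
    metres_per_second = speed_kmh * 1000 / 3600
    return metres_per_second * delay_ms / 1000

# Latency figures below are illustrative assumptions, not measurements
for label, delay_ms in [("on-board (edge), ~10 ms", 10), ("cloud round trip, ~100 ms", 100)]:
    print(f"{label}: {distance_during_delay(100, delay_ms):.2f} m at 100 km/h")
```

Under these assumptions, the car covers roughly 0.28 m while waiting for an on-board decision and roughly 2.78 m while waiting for the cloud. Real latencies vary widely by network and workload.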

Key Differences Between Edge and Cloud Computing

| Feature | Cloud Computing | Edge Computing |
|---|---|---|
| Location of Processing | Centralized (data centers) | Local (devices or nearby servers) |
| Latency | Higher (due to distance) | Very low (real-time responses) |
| Use Case | General applications, storage, analytics | Time-sensitive or remote environments |
| Scalability | Highly scalable | Limited by local hardware capacity |
| Internet Dependency | Requires a reliable internet connection | Can function with limited or no connectivity |
| Security | Depends on the cloud provider's centralized controls | Keeps data local, but each device must be secured |

Use Cases: When to Use Each

✔️ Use Cloud Computing For:

- Hosting websites or apps

- Running enterprise software (CRM, ERP)

- Data analytics and AI training

- Managing big data archives

- SaaS products (such as Zoom and Dropbox)

✔️ Use Edge Computing For:

- Smart cities and traffic control systems

- Industrial automation and predictive maintenance

- Augmented reality (AR) and virtual reality (VR)

- Remote health monitoring

- Autonomous vehicles and drones

Cloud + Edge = The Best of Both Worlds

In 2025, many businesses aren’t choosing between cloud and edge; they’re combining both. This hybrid approach is often called an edge-cloud (or edge-to-cloud) architecture, a form of distributed computing.

Example: A manufacturing company might use edge devices on the factory floor to monitor equipment in real time, while syncing that data with the cloud for large-scale analytics and long-term storage.
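The factory example can be sketched as an edge function that handles one time window of vibration readings. Readings over a limit are flagged for immediate on-site action, and only a compact summary is kept for syncing to the cloud. This is a hypothetical Python sketch. The threshold, units, machine ID, and field names are assumptions, and the actual cloud upload is left out.

```python
from statistics import mean

# Hypothetical alarm threshold for vibration velocity, in mm/s
VIBRATION_LIMIT = 7.0

def process_window(machine_id, readings, limit=VIBRATION_LIMIT):
    """Run on the edge device for one window of sensor readings.

    Returns (alerts, summary): alerts are handled locally right away,
    and the small summary dict is what would be synced to the cloud.
    """
    if not readings:
        return [], None  # nothing to report for this window
    alerts = [r for r in readings if r > limit]
    summary = {
        "machine": machine_id,
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        "alerts": len(alerts),
    }
    return alerts, summary

alerts, summary = process_window("press-07", [2.1, 2.4, 8.3, 2.2])
print(alerts)  # [8.3]
print(summary)
```

Sending one summary per window instead of every raw reading keeps bandwidth low. The cloud still receives enough data for long-term trend analysis.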

This combination pairs fast local responses with the cloud’s scale for analytics. It also reduces bandwidth costs and limits the impact of network outages.

Final Thoughts

The future of computing isn’t just in the cloud—or just at the edge. It’s in smart integration.

- Cloud computing is still essential for large-scale data storage, analytics, and global access.

- Edge computing is crucial for real-time decision-making, especially when speed, privacy, or connectivity matters.

As tech advances in 2025, understanding both is key to building smarter, faster, and more resilient systems.